Carlos Arana has been thinking a lot about generative AI lately. Arana is writing Berklee Online’s first four-week course, AI for Music and Audio, and part of the reason the course is only four weeks long is that the technology it covers is evolving so rapidly that he anticipates making frequent revisions to the lesson content. That is especially true of the lesson on generative AI, but we’ll get to that shortly.

The first lesson brings students up to speed on the types of artificial intelligence and the fundamentals of signal processing and music information retrieval. It’s a “how we got here” of sorts, explaining the evolution of AI. The second lesson deals with audio content analysis and source separation, which means using artificial intelligence to extract content from existing music. The third lesson focuses on using AI for music production tasks such as mixing and mastering, as well as for music distribution on streaming platforms.

It’s the final lesson, “Generative AI in Music,” that will likely require the most updates. This lesson is also, to some degree, the most controversial. Generative AI has musicians concerned for their livelihood, and in some cases, for the future of music itself. A recent study by Goldmedia—a European consultancy and research group focused on the media and entertainment industries—found that 64 percent of music creators believe that the risks of AI use outweigh its potential opportunities.

But Dr. Arana, who earned his PhD from the University of Buenos Aires (UBA-UNLZ) in 2022, says generative AI is nothing for musicians to be afraid of. The only thing to be afraid of is ignorance.

“Our mission is to give students the largest amount of knowledge that we have on the topic so far, considering the amount of time we have,” he says. “And then, once they’ve learned all there is to know, it will be their turn to make an informed decision.”

When did you first become interested in artificial intelligence in music?

Essentially, it’s something I’ve wanted to investigate for decades, and AI gave me the necessary tools to turn it into a development with tangible results. Then, when I went to get my PhD, my thesis was an investigation into the application of AI, specifically deep learning and generative neural networks, in the field of music education.

When data science got famous (I’m talking about around 2016, because before that, data science was called data mining), I was involved in machine learning. And from there, everything happened so fast. What’s interesting is that the definitions of different disciplines and techniques are all covered by the term “AI” now. But a couple of years ago, we only considered AI to be deep learning or something connected to more complex things. And we drew a distinction between machine learning, data science, data mining, and all those concepts that were applied more to enterprise and business solutions.

At that time, AI was more related to image recognition or to language, in what is called natural language processing (NLP). It was more of a laboratory or research thing. And over the last couple of years, everything has become AI. In some sense it’s a good way of naming it, because the broad definition of AI is computers or programs that emulate human reasoning and human decision-making processes. So that’s quite a precise definition of AI.

That’s really interesting. I never really put two and two together that the practice of data mining is what led to this. And you teach Liv Buli’s Data Analytics in the Music Business course as well, which also makes perfect sense. You’ve met her, right?

Absolutely, yes.

She’s awesome! I’m a big fan.

Yes, she’s awesome! It’s been an incredible experience, because her view (she’s a journalist like you) is obviously data-centric and data-driven, since that’s the scope of that class. And the way I tend to orient students is with this basic premise: everything you affirm, or that you state, has got to be backed by data. So you cannot say “we’ve seen impressive growth with this artist” or “this artist is doing well in this place.” You have to support that by telling me the benchmark you are comparing against and showing that it’s better, or by showing me the evolution with a curve, a visualization, a graph, a table, whatever.

And students are very happy that I always stress that point; they find it very useful to have to justify everything with data. Actually, the AI models that are working best now, performing better than any others, are the ones that emulate human intelligence based on data, that is, trained on lots of cases where we know the variables.

So there are two types of AI. The first is the classical type, where you emulate human intelligence with rule-based and probabilistic procedures or algorithms. You have to be very clear in writing down, or determining, the rules or probability distributions that capture all of an expert’s knowledge. These are called expert systems: you want to formalize all that knowledge in a predetermined set of rules. That’s the classical approach to AI.

The second generation of AI models is the one that is famous now because of its performance. Here, you model all that knowledge from data instead of using rules or a probability distribution. What the model does is learn the pattern that relates the inputs to the output. And what we have to understand is what happens in between, which used to be the expert knowledge that we encoded as rules.

So that’s the big difference: one is rule-based, and the other is data-driven. Those are the two main types of artificial intelligence, and the ones that are more famous now, obviously, are the second ones.
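To make the distinction concrete, here is a toy sketch in Python. The task (labeling a track “uptempo” or “slow” from its tempo), the 120 BPM rule, and the training data are all hypothetical, chosen only for illustration; real systems use many features and far more data. The first function is a hand-written expert rule, while the second learns its threshold from labeled examples by picking the split that makes the fewest mistakes (a one-feature decision stump).

```python
# Toy contrast between rule-based and data-driven AI.
# Hypothetical task: label a track "uptempo" or "slow" from its tempo (BPM).


def expert_rule(bpm: float) -> str:
    """Rule-based approach: an expert writes the rule by hand."""
    return "uptempo" if bpm >= 120 else "slow"


def learn_threshold(examples: list[tuple[float, str]]) -> float:
    """Data-driven approach: learn the tempo threshold from labeled examples.

    Tries the midpoint between each pair of neighboring tempos and keeps the
    one that misclassifies the fewest examples (a one-feature decision stump).
    """
    tempos = sorted(bpm for bpm, _ in examples)
    candidates = [(a + b) / 2 for a, b in zip(tempos, tempos[1:])]

    def errors(threshold: float) -> int:
        return sum(
            ("uptempo" if bpm >= threshold else "slow") != label
            for bpm, label in examples
        )

    return min(candidates, key=errors)


# Hypothetical labeled data: (tempo in BPM, label given by a listener).
training = [(70, "slow"), (85, "slow"), (95, "slow"),
            (105, "uptempo"), (128, "uptempo"), (140, "uptempo")]

threshold = learn_threshold(training)
print(threshold)         # 100.0
print(expert_rule(105))  # slow
print("uptempo" if 105 >= threshold else "slow")  # uptempo
```

With this made-up data the learned boundary lands at 100 BPM, so the two approaches disagree about a 105 BPM track: the rule encodes what the expert believed, while the learned model reflects whatever the labeled examples say.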

As a musician—independent of your role as the author of the AI for Music and Audio course—what types of artificial intelligence do you find yourself using the most?

I have 30 years of experience as a music professional, and what I’ve found is that AI helps me with very precise and specific tasks. For instance, I had a recording I made in the past for which I didn’t have the stems, and I needed to remix it. We specifically wanted to make some modifications to the vocals, so I extracted them with Moises.ai, using the premium version, which gives you the best quality. Then we were able to modify them the way we needed. That was a real application that would have been impossible a couple of years ago.

Another thing I do now: I send my tracks to a producer who then prepares the final arrangement. I deliver eight different versions of my guitar playing to this producer: one more rhythmic, one with arrangements, one with feel, a solo one, etc. And what I’ve found is that I can use iZotope Neutron, for instance: I pick some tracks by João Gilberto, one of my guitar heroes from the bossa nova genre, and use them as a reference to adapt the sound of my guitar recordings to match their EQ and balance.

So I am remixing, reprocessing, and post-processing my guitar track using an intelligent mixing procedure. It’s called mixing by reference, because you use a reference audio file and match its spectral profile.
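The core idea of mixing by reference can be sketched in a few lines of Python with NumPy. This is a simplified illustration under stated assumptions, not a description of how iZotope Neutron or any other product works internally: it measures the long-term average spectrum of the reference and of the target, takes their ratio as a per-frequency gain curve (clipped to plus or minus 20 dB), and applies that curve to the target. The function names and the synthetic test signals are hypothetical stand-ins for a guitar track and a reference recording.

```python
import numpy as np


def average_spectrum(signal, frame=1024, hop=512):
    """Long-term average magnitude spectrum over Hann-windowed frames."""
    window = np.hanning(frame)
    frames = [signal[i:i + frame] * window
              for i in range(0, len(signal) - frame + 1, hop)]
    return np.mean([np.abs(np.fft.rfft(f)) for f in frames], axis=0)


def match_eq(target, reference, frame=1024, max_gain=10.0, eps=1e-9):
    """Per-bin linear gain curve that moves the target's average spectrum
    toward the reference's, clipped to +/- 20 dB (max_gain=10)."""
    ratio = (average_spectrum(reference, frame) + eps) / (
        average_spectrum(target, frame) + eps)
    return np.clip(ratio, 1.0 / max_gain, max_gain)


def apply_gains(signal, gains):
    """Apply the gain curve in one zero-phase FFT pass over the whole signal."""
    spectrum = np.fft.rfft(signal)
    # Stretch the coarse per-frame-bin curve onto the full-length FFT bins.
    curve = np.interp(np.linspace(0.0, 1.0, len(spectrum)),
                      np.linspace(0.0, 1.0, len(gains)), gains)
    return np.fft.irfft(spectrum * curve, n=len(signal))


# Hypothetical test signals: one second at 22.05 kHz. The "reference" has a
# brighter top end (a louder 3 kHz partial) than the "target" recording.
sr = 22050
t = np.arange(sr) / sr
target = np.sin(2 * np.pi * 220 * t) + 0.1 * np.sin(2 * np.pi * 3000 * t)
reference = np.sin(2 * np.pi * 220 * t) + 0.5 * np.sin(2 * np.pi * 3000 * t)

matched = apply_gains(target, match_eq(target, reference))
```

Real tools generally add refinements this sketch omits, such as smoothing the gain curve and letting the user choose how much of the match to apply; leaving them out keeps the spectral-ratio idea visible.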

And speaking of applications, I’m also mastering some material I have from the past.

I really do like how convenient AI is for mastering. It’s the perfect solution for when I have a track that I don’t necessarily need to release commercially, but I want it to at least sound better than what I could get just by mixing down from my DAW.

Obviously. It’s incredible!

There has been some backlash against musicians using AI, which I hope we can discuss shortly, but before we get into that, tell me about your own experience with generative AI, which is really the source of most of the controversy.

Lately, I’ve been using generative AI tools more for arranging than composing. Let me share something that’s yielding results when writing arrangements for a brass section. In this process, I utilize style transfer models. First, I sample the sound of a specific instrument, for example, an alto saxophone, which I extract from recordings that appeal to me using a source separation app or directly from a sound repository. Then, I record the lines for that instrument using my guitar. The style transfer app then generates the same melodic line but with the sound of the saxophone I sampled before! This way, I proceed with the different instruments I decide to include in the brass section and assemble the various voices of the arrangement, which I then convert to MIDI to send to the music notation software. For the first time in my life, I show up to the band’s rehearsals with the scores of exactly what I want the saxophonist, the trumpet player, or any other musicians for whom I’ve written the arrangement to play.
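The last step of that workflow, turning a rendered audio line into MIDI for notation software, rests on a simple idea: estimate the pitch frame by frame, map each frequency to the nearest MIDI note (A4 = 440 Hz = note 69), and merge consecutive frames with the same note into one event. The Python sketch below assumes the frame-by-frame pitch estimates already come from some pitch tracker; the function names, the input list, and the 10 ms hop are hypothetical, and real audio-to-MIDI tools handle vibrato, onsets, and velocity far more carefully.

```python
import math


def hz_to_midi(freq_hz: float) -> int:
    """Nearest MIDI note number for a frequency (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def frames_to_notes(f0_track, hop_seconds):
    """Merge consecutive frames with the same MIDI pitch into note events.

    f0_track holds one pitch estimate in Hz per frame (None or 0 = silence),
    assumed to come from some upstream pitch tracker. Returns a list of
    (midi_note, start_seconds, duration_seconds) tuples.
    """
    notes = []
    current, start = None, 0
    # Appending a silent sentinel frame flushes the final note.
    for i, f0 in enumerate(list(f0_track) + [None]):
        pitch = hz_to_midi(f0) if f0 else None
        if pitch != current:
            if current is not None:
                notes.append((current, start * hop_seconds,
                              (i - start) * hop_seconds))
            current, start = pitch, i
    return notes


# Hypothetical pitch-tracker output at a 10 ms hop: a slightly wobbly A4,
# one silent frame, then B4.
f0 = [440.0, 441.0, 439.0, None, 493.88, 493.88]
print(frames_to_notes(f0, hop_seconds=0.01))  # two notes: MIDI 69, then 71
```

Rounding to the nearest semitone is what absorbs small pitch wobbles, which is why the three slightly different A4 frames collapse into a single note.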

But I also sometimes use generative AI tools for these arrangements when I want to explore more ideas. I use a mode in a generative AI app called “continue,” which lets me input a musical idea and have the app generate different variants that continue the melody I entered. The great thing is that it generates the melodies based on the harmony you input, so I’ve often used this function and chosen something from there. It might not always be the complete melody generated by the app, but rather some fragment that sounded interesting to me. Overall, these AI-generated melodies have helped by giving me more ideas or helping me overcome a creative block.
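A harmony-aware “continue” feature can be imitated, very loosely, with a toy model. The Python sketch below is an illustration under stated assumptions, not how any particular app works: it learns which pitches followed which in the input melody (a first-order Markov chain over MIDI note numbers) and, for each chord in a supplied progression, picks a learned successor that is a chord tone, falling back to the chord tone nearest the previous note when none fits. The function names, the melody, and the chords are hypothetical; different seeds give different variants.

```python
import random
from collections import defaultdict


def build_transitions(melody: list[int]) -> dict[int, list[int]]:
    """First-order Markov model: which pitches followed each pitch."""
    transitions = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        transitions[current].append(following)
    return transitions


def continue_melody(melody, chords, seed=None):
    """Generate one new note per chord, continuing `melody`.

    melody: MIDI note numbers. chords: one non-empty set of pitch classes
    (0 = C) per new note. Prefers successors seen in the input melody that
    are chord tones; otherwise uses the chord tone nearest the previous note.
    """
    rng = random.Random(seed)
    transitions = build_transitions(melody)
    result = list(melody)
    for chord in chords:
        prev = result[-1]
        fitting = [p for p in transitions.get(prev, []) if p % 12 in chord]
        if fitting:
            nxt = rng.choice(fitting)
        else:
            nearby = [p for p in range(prev - 12, prev + 13) if p % 12 in chord]
            nxt = min(nearby, key=lambda p: abs(p - prev))
        result.append(nxt)
    return result[len(melody):]


# Hypothetical input: C D E D C, to be continued over C major, then G major.
melody = [60, 62, 64, 62, 60]
progression = [{0, 4, 7}, {7, 11, 2}]
print(continue_melody(melody, progression, seed=1))  # [60, 62]
```

The harmony constraint is what keeps every variant consistent with the chords; with a longer input melody there are more learned successors to choose from, so changing the seed yields more varied fragments to pick from.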

SIGN UP FOR AI FOR MUSIC AND AUDIO WITH CARLOS ARANA NOW!

So, for a professional songwriter or arranger, depending on the situation, these tools can be indispensable. But take a beginner songwriter using that same program, and they will depend on it so much more. In my case, as a professional, experienced musician, it simply suggests ideas to me. It’s not going to automate that particular process.

So there are different levels of control that I call out in the course. The first one is insight-based. The second is where the model suggests ideas for you; it’s telling you that you have all these options. And the third is full automation, the pilot. It’s not a co-pilot; it’s the actual pilot, driving the car by itself.

And this notion of the car driving itself is exactly what seems to irk artists and musicians the most, the fear that art and music will have no soul if everybody is just making it with the aid of artificial intelligence. How do you address that in the course?

The essential mission of this course is, as much as possible, to lift the veil and demystify AI models and tools. The goal is to teach students how to coexist with current AI tools to enhance the productivity and efficiency of their music-related activities and workflow; to take the necessary actions to adapt to and coexist with the tools offered on the market in due time, with the fundamental goal of benefiting from them, or at the very least being aware of their existence, utility, and scope; and to be prepared and trained to receive, interact with, control, and benefit from the tools, apps, and plug-ins that will undoubtedly be developed and introduced to the market at a rapid pace in the coming years.

So, if students tell me they are afraid they will be excluded because of AI, we can debate that, starting by asking whether they know what these tools can give them: “Do you know the utility, the features, and the scope of these tools, and the quality of what they produce?” From there, we can continue the debate.

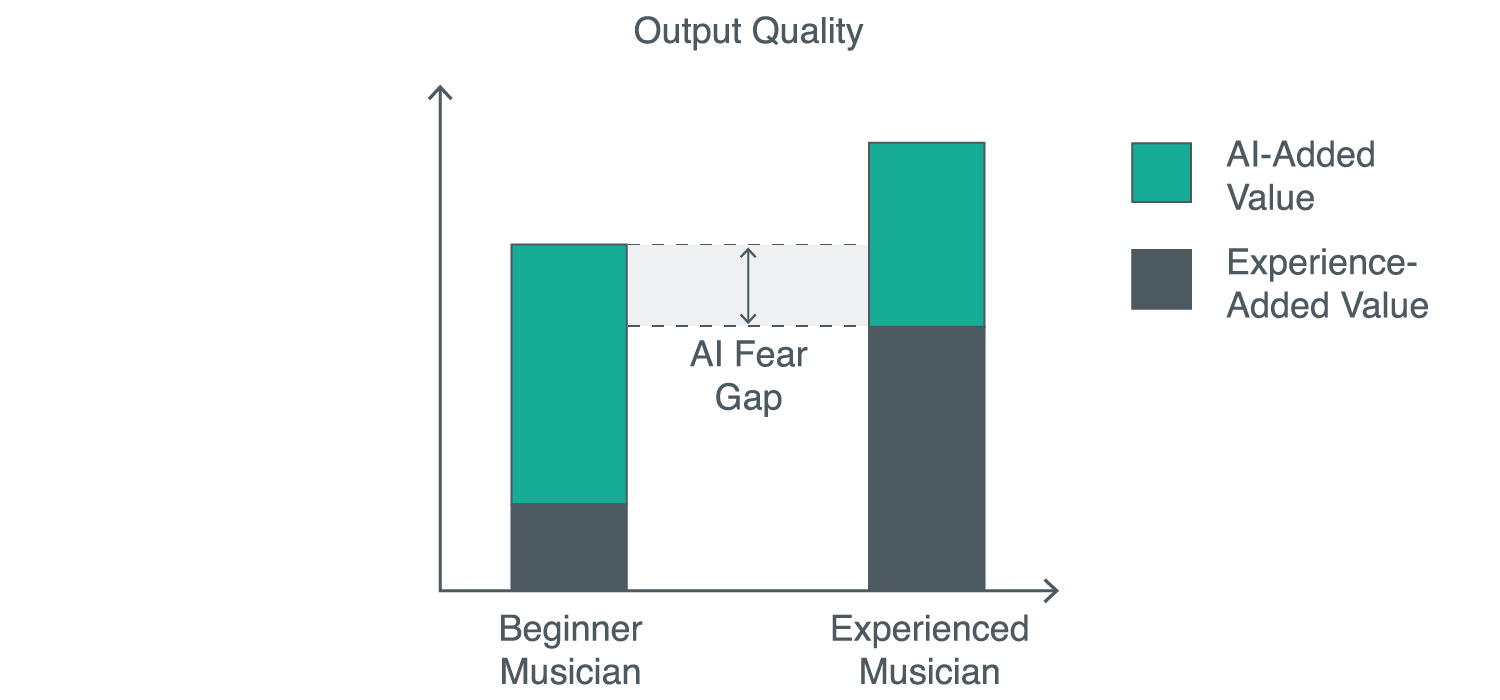

I have a graph that I like to share when discussing AI. I showed it to Liv Buli and she was delighted.

On the left is what the beginner knows, represented in black. On the right is what the non-beginner, or professional, knows, also represented in black. In green, for both, is the value added by AI. For a beginner, that added value is going to be much larger than their original expertise or knowledge. The Y axis shows the output quality or level.

The experienced musician knows a lot more, obviously. But if the experienced musician is not using AI, there’s a risk that a beginner musician could surpass their output quality. See how the green in the beginner bar is taller than the black in the experienced bar? It seems to me that all of the doomers’ comments are associated with this gap that’s in the middle of these two bars.

I call it “the AI fear gap,” because people fear that gap: that if they do not use these tools, beginners are going to produce better-quality work than theirs. But that’s a fear you can work on if you start using these tools.

If an experienced musician starts using AI, they can add this value to their work, and they will always be confident and satisfied with their productions or creations. They will always be ahead, and their contribution and added value will be considered and recognized. So this is the chart that, in some sense, summarizes my intention with the course: to raise the bar on everybody’s work.

On a personal level, have you been surprised by people who react strongly to AI in music?

When something revolutionary comes along, some people will always react. A couple of analogies come to mind: when electronic drums showed up, and then when DAWs showed up, people were nervous that they were going to replace drummers and mixing engineers. The difference is that at that time we didn’t have social media, so we couldn’t actually see what all the doomers had to say.

But let’s think about current AI tools in music and how long they’ve been around. Take AI mixing and mastering tools, for instance, such as iZotope Neutron and Landr. How long have they been around?

A couple of years at least, right?

What was the reaction of the mixing and mastering engineers, or the student community, to the launch of those apps and plug-ins? At the beginning, I’m pretty sure it was “Hey, they’re going to take our place, blah, blah.” And the community and the workforce adapted, and everyone is using these tools now.

But people still say, “I’m going to take a course here from a very well-known mixing engineer because I want more knowledge.” People still want to understand what’s going on in the mix and learn the traditional approach, and then they decide they need that knowledge in order to get the added value of AI.

So this is going to be the same. And actually, at the current stage of generative AI apps and plug-ins, the improvement in the quality and level of the output is not of the same degree as what you have with mixing and mastering tools. So you get the idea.

So if the community was able to survive the emergence of these AI mixing and mastering products, why wouldn’t we be able to do the same with generative AI?

It’s more like a tool for increasing efficiency, like the examples I was telling you before.

But we have to be aware that someday, if we are distracted, professional musicians might be as surprised as professional writers were by ChatGPT. So that’s my main idea: know the uses, features, and contributions, and don’t be afraid, because the worst part has already been accepted, namely music content extraction and production tools. No one is debating mastering with Landr, mixing with iZotope Neutron, or extracting content with AI.

Here’s one example: I did the transcriptions for the most important record in bossa nova history, the Getz/Gilberto album. I transcribed the complete scores: João Gilberto’s guitar and vocal parts, Antonio Carlos Jobim’s piano parts, and Stan Getz’s solos, man! I did it all by ear! In the final mix of that record, obviously, all the instrumentalists are playing together, and that’s what I was hearing. I had to transcribe each note of Stan Getz’s amazing and complex solos, and also each stroke on the hi-hat: chits, chits, chits, chits. Wow! Days and days of listening for sax solos, guitars, vocals, bass, and hi-hats in the middle of the whole mix. If you hired me for that job today, with AI I’d be able to do it in a twentieth of the time, because I would isolate each instrument and then transcribe it. So all of that is here already. The only thing people are frightened about is generative AI, and the advancements in generative AI are small compared to the advancements in natural language AI.

I think I told you the other day about how I will sometimes use ChatGPT, especially when I need some spark for marketing copy, and every single time it spits out the word “unleash” to me. And I have to say, “stop using ‘unleash’!” My associates and I basically liken ChatGPT to an overeager intern. Sure, it does the tasks very quickly, but it almost always requires work on our part to finesse it. But it definitely does help with brainstorming.

That’s exactly the point! You are a journalist, a professional; you’ve been writing for years, and you know your profession and what you’re doing because you’ve been trained. You have the trial-and-error expertise that built your professional career, and that is not going to be replaced by a machine. Even though these models have scraped the entire internet, and even with that insane amount of processing, they are not able to generate something about which a professional will say, “oh, this is a fantastic piece of work.” It can surprise you sometimes, because you think, “wow, this was done by a machine?” But if you have to write a professional article, it’s going to help you. For instance, I find it great for summarizing. Yesterday I wrote out a speech I was going to give, all on one page, and then I said, “give me 12 bullets for this.”

Many of them were great, but obviously I had to polish a lot of them. What it gave me was the kickoff, which made everything much easier afterwards. That’s what it does: it enhances your work and helps you. And it’s exactly the same with generative AI for music. But we have to know its extent and its features, and we have to know how these models are developed and trained, in order to understand the ethical concerns and issues.

So our intention with this class is to develop the maturity that you already have with ChatGPT. We already have experience with a monster that is much bigger than the one we have in music: ChatGPT, which has collected virtually all human knowledge!

So, that’s our mission here, Pat, to try to emulate that maturity that you already have after interacting and coexisting with ChatGPT for 15 months. That’s what we have to do with music here at Berklee. That’s our mission.

Right, so you mention that generative AI is not quite there with scraping the whole history of human input with music yet, but explain to me how you approach the ethical considerations of AI and generative AI in the course.

Indeed, for text, large companies such as Google, Meta, OpenAI-Microsoft, and others have managed to scrape the entire web, accessing the vast majority of human knowledge in text form. Immense quantities of images have also been compiled, since tracking their copyright is much more elusive. For generative AI in music, however, the process has not been as exhaustive, because of the greater control exerted by the companies and institutions responsible for safeguarding copyright. As we well know, AI models and applications require vast amounts of data for their training, referred to as “datasets” in AI jargon, and in the case of music the samples are songs, the majority of which are protected by copyright law. That is why I drew the distinction: to encourage the music industry as a whole to become aware of this so that it can, in some way, frame the development of generative AI models for music.

Copyright laws provide crucial protection for the creators of original musical compositions and lyrics, offering them exclusive rights to reproduce, distribute, perform, and display their work publicly. These laws are designed to foster creativity and innovation by ensuring creators can control and financially benefit from their creations. The debate around the use of deep learning models in music highlights the pressing issue of whether AI-generated music can be protected under copyright law and, if so, who holds the authorship. The overarching goal is to craft a balanced approach that respects both human and AI contributions to the creative process, ensuring copyright law continues to protect innovation and creativity in the music industry.

Practical implications include the risk of inadvertent copyright infringement by AI systems and the need for AI engineers to implement safeguards against such violations. Crucially, we need to understand to what extent a work generated with AI can be attributed to the creativity of a human being, to the program running behind the AI app, or to the songs in the “dataset” used to train the model operating behind the scenes.

Transparency can empower artists and listeners by giving them detailed information on the extent of AI’s involvement in music creation. YouTube just announced a way for video creators to self-label content that is AI-based. This is comparable to food labeling, where consumers are told which ingredients were used. The complexities of achieving such transparency include breaking down the music creation process to reveal AI’s role. Furthermore, the requirement for extensive documentation imposes a significant burden, especially on emerging artists without substantial support.

Moreover, the reliance on extensive datasets for training AI models introduces the risk of perpetuating existing societal biases. These biases, when encoded into AI systems, can lead to discriminatory outcomes, underscoring the need for engineers to critically evaluate the data sources and methodologies they employ in AI development, ensuring a fair and unbiased approach to AI-generated music.

Basically, the integration of AI into music creation presents both opportunities and challenges. It prompts a global debate on adapting copyright laws to technological advancements, emphasizing the need for ethical principles, transparency, and efforts to mitigate negative impacts. As AI continues to influence the creative arts, ongoing dialogue, research, and policy development are essential to ensure it serves as a force for good, enhancing creative arts without compromising ethical standards or societal values. This comprehensive approach is critical in harnessing the potential of AI in music creation, ensuring a sustainable and ethically responsible future for the intersection of technology and the arts.
